QELAR

From NUEESS


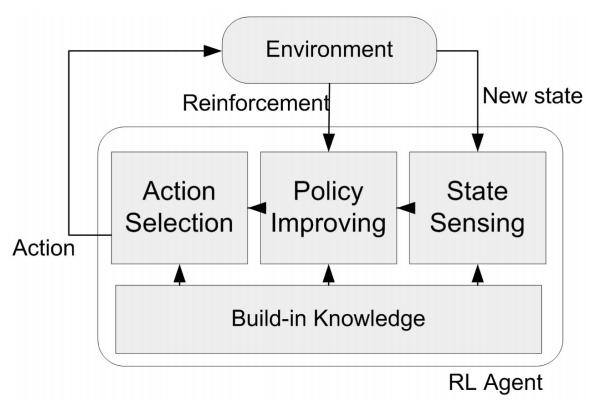


Revision as of 15:33, 23 May 2011


Overview

QELAR is a Q-learning-based energy-efficient and lifetime-aware routing protocol designed to address issues specific to underwater acoustic sensor networks (UW-ASNs). By learning from the environment and evaluating an action-value function (Q-value), which gives the expected reward of taking an action in a given state, each distributed learning agent is able to make routing decisions automatically.

We find that Q-learning is well suited to UW-ASNs for the following reasons:

- Low Overhead. Each node keeps routing information only for its direct neighbors, which form a small subset of the network. Routing information is updated by one-hop broadcasts rather than by flooding.

- Dynamic Network Topology. Topology changes happen frequently in the harsh underwater environment. Without GPS available underwater, QELAR learns from the network environment and allows a fast adaptation to the current network topology.

- Load Balance. QELAR takes node energy into consideration in Q-learning, so that alternative paths can be chosen to use network nodes in a fair manner, in order to avoid 'hot spots' in the network.

- General Framework. Q-learning is a framework that is easy to extend. Other factors, such as end-to-end delay and node density, can be integrated, and all factors can be balanced as needed by tuning the parameters of the reward function.
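The load-balancing and tunable-reward points above can be illustrated with a small Python sketch. It is not the reward function from the QELAR papers: the names `reward` and `choose_next_hop`, the parameters `base_cost`, `energy_weight`, and `gamma`, and the neighbor table layout are all hypothetical, chosen only to show how a weight on residual energy can shift the next-hop choice among direct neighbors.

```python
def reward(energy_fraction, base_cost=1.0, energy_weight=0.5):
    """Hypothetical per-hop reward (always negative, i.e. a cost).

    energy_fraction: neighbor's residual energy as a fraction of its
    initial energy (1.0 = full, 0.0 = depleted).
    """
    depletion = 1.0 - energy_fraction
    return -base_cost - energy_weight * depletion


def choose_next_hop(neighbors, gamma=0.9, **reward_params):
    """Pick the direct neighbor with the highest estimated Q-value.

    neighbors maps a neighbor id to a dict holding:
      'energy': residual energy fraction of that neighbor
      'value' : value estimate that neighbor advertised (assumed to
                arrive via a one-hop broadcast)
    """
    def q_value(info):
        return reward(info["energy"], **reward_params) + gamma * info["value"]

    return max(neighbors, key=lambda n: q_value(neighbors[n]))


# Assumed example data: A is on the slightly better path but nearly drained.
neighbors = {
    "A": {"energy": 0.2, "value": -2.0},
    "B": {"energy": 0.9, "value": -2.2},
}

ignore_energy = choose_next_hop(neighbors, energy_weight=0.0)
balance_load = choose_next_hop(neighbors, energy_weight=1.0)
```

With the energy term switched off, the sketch prefers neighbor A; with a larger `energy_weight`, it diverts traffic to B, which has more energy left. Further terms (for example a delay penalty) could be added to `reward` in the same way, each with its own weight.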

Design

Q-learning

Q-learning is a reinforcement learning (RL) algorithm by which a system learns from its own experience to achieve a goal in a control problem. An RL agent chooses actions according to the current state of the system and the reinforcement (reward) it receives from the environment. Most RL algorithms estimate value functions, which are functions of states (or of state-action pairs) that evaluate how good it is for the agent to be in a given state (or to perform a given action in a given state).
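As a minimal sketch of how such an action-value function can be estimated, the following Python snippet applies the standard tabular Q-learning update. It shows the textbook rule, not QELAR's specific formulation; the function name `q_update`, the state and action labels, and the values of the learning rate `alpha` and discount factor `gamma` are assumptions made for illustration.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, next_actions,
             alpha=0.5, gamma=0.9):
    """Apply one standard tabular Q-learning update and return the new value.

    q            : mapping from (state, action) to the current estimate
    next_actions : actions available in next_state (empty if terminal)
    alpha, gamma : learning rate and discount factor (assumed values)
    """
    # Value of the best action available in the next state (0 if none).
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    # Move the current estimate toward reward + discounted future value.
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)  # all estimates start at 0.0
first = q_update(q, "s0", "a", reward=1.0, next_state="s1",
                 next_actions=["a", "b"])
second = q_update(q, "s0", "a", reward=1.0, next_state="s1",
                  next_actions=["a", "b"])
```

Each call moves the estimate for the pair `("s0", "a")` part of the way toward the observed reward plus the discounted value of the best next action, so repeated experience refines the estimate.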

Related Publications

- T. Hu and Y. Fei, “QELAR: A machine-learning-based adaptive routing protocol for energy efficient and lifetime-extended underwater sensor networks,” IEEE Trans. on Mobile Computing, vol. 9, no. 6, June 2010.

- T. Hu and Y. Fei, “QELAR - A Q-learning-based energy-efficient and lifetime-aware routing protocol for underwater sensor networks,” in IEEE Int. Performance Computing & Communications Conf., Dec. 2008.

Simulation Tools

Downloads

Installation Guide
